“By harnessing the power of an FPGA and a simple camera, you can create your very own digit recognition system from the comfort of your own desktop.

In the world of artificial intelligence (AI), recognizing handwritten digits is the classic test that shows your artificial neurons are wired correctly and in working condition. This application of AI is already quite old: its breakthrough came in 1989, when reliable machine parsing of ZIP codes for postal services was achieved. Soon after, it was proven that multi-layer feed-forward networks can approximate any continuous function to arbitrary accuracy, given enough hidden units. Shortly afterward, financial institutions adopted the technique for automatically parsing account numbers on remittance slips for wire transfers and on bank checks. Today, the recognition of handwritten digits using various AI techniques is also popular in academia for teaching and learning purposes.

Recognition of Handwritten Digits is Complex

While the problem itself has not changed over the years, computing power has increased dramatically in recent decades. It is now possible to run the recognition on an FPGA (Field Programmable Gate Array) board suitable for teaching, together with a small digital camera. Nevertheless, it is still one of the harder tasks for an artificial intelligence to tackle. Handwritten digits differ in size, width, and orientation, and each person’s handwriting has distinct variations. Different cultures even write digits differently. There are, for example, subtle differences in how Americans and Europeans write the digits 1, 4, and 7.

Another source of difficulty is the similarity between some digits: 3 and 8, 0 and 8, 5 and 6, 2 and 7, etc. Last but not least, the quality of the image itself has a large influence. If not properly trained, the AI will misclassify too many digits for the final product to be of any use. Trying to implement such a recognition system with a classical, rule-based programming approach quickly mires the programmer in details and special cases.

Implementing a Textbook Version

But how does the recognition work? Viewed from a very high level, it’s straightforward: an image of the digit needs to be captured, processed, and analyzed, and the result presented to the user.

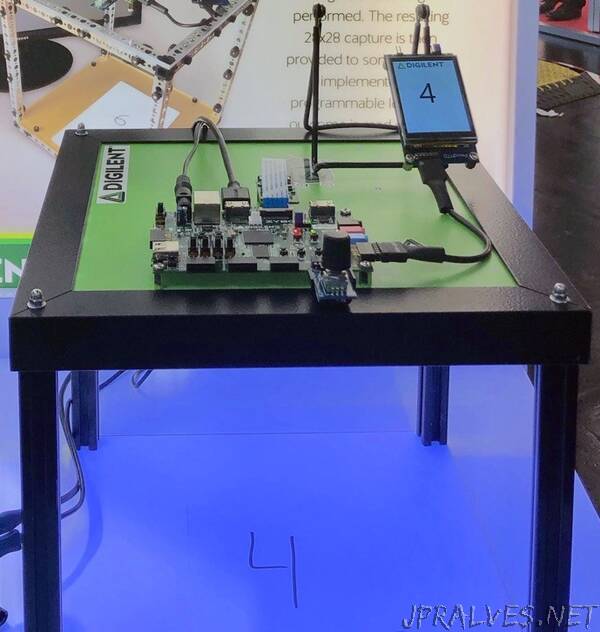
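To make the four stages concrete, here is a minimal Python sketch of the capture, process, analyze, and present chain. It is only an illustration under loud assumptions: the function names (`capture`, `preprocess`, `classify`, `present`) are hypothetical, the "camera" returns a hard-coded 6x6 test image, and the "analysis" is a toy aspect-ratio rule standing in for a trained neural network. It is not Digilent's implementation.

```python
from typing import List

Image = List[List[int]]  # grayscale pixels, 0 (black) to 255 (white)


def capture() -> Image:
    """Stand-in for the camera (assumption): a 6x6 image with a dark vertical stroke."""
    background, ink = 230, 20
    return [
        [ink if x in (2, 3) and 1 <= y <= 4 else background for x in range(6)]
        for y in range(6)
    ]


def preprocess(img: Image, threshold: int = 128) -> Image:
    """Binarize (1 = ink, 0 = background) and crop to the bounding box of the ink."""
    binary = [[1 if pixel < threshold else 0 for pixel in row] for row in img]
    rows = [y for y, row in enumerate(binary) if any(row)]
    cols = [x for x in range(len(binary[0])) if any(row[x] for row in binary)]
    if not rows:  # blank image: nothing to crop
        return binary
    return [row[cols[0]:cols[-1] + 1] for row in binary[rows[0]:rows[-1] + 1]]


def classify(img: Image) -> int:
    """Toy placeholder for the analysis step: a tall, narrow blob is called a 1,
    anything else a 0. A real system would run a trained network here."""
    height, width = len(img), len(img[0])
    return 1 if height >= 2 * width else 0


def present(digit: int) -> str:
    """Format the result for the user (a real system would drive a display)."""
    return f"Recognized digit: {digit}"


def recognize() -> str:
    """Chain the four stages: capture -> process -> analyze -> present."""
    return present(classify(preprocess(capture())))


if __name__ == "__main__":
    print(recognize())
```

Keeping each stage behind its own function means the toy `classify` could later be swapped for a real classifier without touching capture or presentation.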

On closer analysis, it soon becomes obvious that many more tasks have to be executed and problems solved. The hardware for such a system is not the real obstacle. Digilent, for example, implemented a textbook version of digit recognition through artificial intelligence as a proof of concept. The setup uses their 5-megapixel Pcam 5C fixed-focus color camera module for image capture, their Zybo Z7 Xilinx Zynq-7000 ARM/FPGA development board, and their Pmod MTDS multi-touch display system as the user interface and to display the result of the recognition process (the whole setup is available at a discount in our Embedded Vision Bundle).”