A project that incorporates Computer Vision in tandem with Bolt IoT. Get ready to control LEDs using just your fingers!

Ever since the advent of computers, technology has been moving forward at an unprecedented rate. New technology has always been developed with one question in mind: how can we make a particular task easier than before? This project follows the same path.

If we trace the journey of lighting back to its beginning, people used lanterns, which relied on the light of the flame inside them to illuminate their surroundings. When electricity came into use for home appliances, we got the filament bulb. Because filament bulbs waste most of their power as heat, we switched to CFL (Compact Fluorescent Lamp) bulbs, and then to an even more energy-efficient source: LEDs (Light Emitting Diodes). We have now reached a stage where voice-controlled LED bulbs are used in homes. Taking a step forward from here, this project explores the possibility of controlling the LED bulbs in our homes using just our hands. The number of fingers we hold up decides how many bulbs light up!

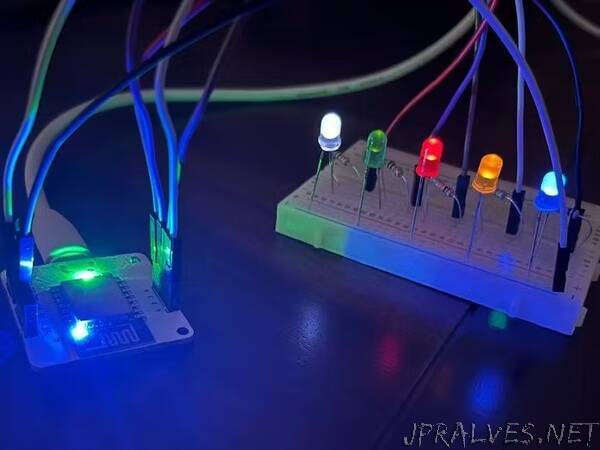

This project explores the realm of Computer Vision and applies it to an IoT (Internet of Things) scenario: we control 5 LEDs (Light Emitting Diodes) using just our fingers. To do this, I have used the OpenCV and MediaPipe libraries in Python.

Hardware Setup

We will use the Bolt IoT WiFi Module to link our software (the finger detection code we will write) and our hardware (the LEDs).
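On the software side of this link, the finger count has to be turned into on/off commands for the LEDs. The sketch below is a minimal illustration, not the author's code: it assumes the `boltiot` Python package and its `Bolt(api_key, device_id).digitalWrite(pin, value)` call, assumes the LEDs sit on pins 0 to 4 (the wiring is described next), and the helper names `led_states`, `apply_states` and `make_bolt_writer` are hypothetical.

```python
# Hypothetical helpers: drive 5 LEDs on Bolt pins 0-4 from a finger count.

NUM_LEDS = 5  # one LED per Bolt digital pin, pins "0" to "4"


def led_states(finger_count, num_leds=NUM_LEDS):
    """Return "HIGH"/"LOW" for pins 0..num_leds-1.

    The first `finger_count` pins are switched on; counts outside
    0..num_leds are clamped into that range.
    """
    lit = max(0, min(finger_count, num_leds))
    return ["HIGH" if pin < lit else "LOW" for pin in range(num_leds)]


def apply_states(states, write):
    """Call write(pin, value) once per pin, passing the pin number as a string."""
    for pin, value in enumerate(states):
        write(str(pin), value)


def make_bolt_writer(api_key, device_id):
    """Return a write(pin, value) callable backed by the Bolt Cloud.

    Assumes `pip install boltiot`; each digitalWrite call is a network
    request to the Bolt Cloud, so it is comparatively slow.
    """
    from boltiot import Bolt  # imported here so the pure helpers work without it

    device = Bolt(api_key, device_id)
    return device.digitalWrite
```

For example, `apply_states(led_states(3), make_bolt_writer(API_KEY, DEVICE_ID))` would switch on the LEDs on pins 0, 1 and 2 and switch off the other two, where `API_KEY` and `DEVICE_ID` are placeholders for your own credentials. Passing `write` in as a parameter keeps the mapping testable without a device; in a live loop it is probably worth calling `apply_states` only when the finger count changes, since every pin update is a cloud request.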

The Fritzing breadboard diagram is given below. The circuit is simple: the positive end (anode) of each of the 5 LEDs is connected through a series resistor to pins 0 to 4 of the Bolt module, respectively, and the negative ends (cathodes) of all the LEDs are connected to ground (GND). The resistors limit the current flowing through the LEDs, since excessive current could damage them.
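The resistor value follows from Ohm's law applied to the voltage left over after the LED's forward drop: R = (V_supply − V_forward) / I. The sketch below assumes a 3.3 V logic level on the Bolt pins and typical red-LED figures (about 2.0 V forward voltage at a 10 mA target current); these numbers are illustrative assumptions, so check your LED's datasheet. It also rounds up to the next standard E12 resistor value, so the actual current stays at or below the target.

```python
# Sketch: series resistor for an LED driven from a GPIO pin (Ohm's law).
# The example voltages and current are assumptions, not measured values.

E12 = (1.0, 1.2, 1.5, 1.8, 2.2, 2.7, 3.3, 3.9, 4.7, 5.6, 6.8, 8.2)


def series_resistor(v_supply, v_forward, current_a):
    """Resistance in ohms that limits the LED current to `current_a` amps."""
    if v_forward >= v_supply:
        raise ValueError("supply voltage must exceed the LED forward voltage")
    if current_a <= 0:
        raise ValueError("current must be positive")
    return (v_supply - v_forward) / current_a


def next_e12(ohms):
    """Smallest standard E12 value >= `ohms` (keeps current at or below target)."""
    decade = 1.0
    while True:
        for base in E12:
            value = round(base * decade, 3)
            if value >= ohms:
                return value
        decade *= 10


# Assumed example: 3.3 V pin, red LED with ~2.0 V forward drop, 10 mA target.
r = series_resistor(3.3, 2.0, 0.010)
print(f"exact: {r:.0f} ohms, next E12 value: {next_e12(r):.0f} ohms")
# prints: exact: 130 ohms, next E12 value: 150 ohms
```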

Software Setup

We will use the OpenCV and MediaPipe libraries in Python for the software side of the project. To detect how many fingers are open, the program first has to detect a hand in the camera frame. To keep this part reusable, we write the hand detection classes and functions in a separate Python file. These classes are shown in the pictures below (Figure 1 and Figure 2). Figure 3 shows the keypoint localization performed by the program on a hand held up to the webcam. We import this file into our main program and move on to finger detection.
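A hand-detection module along these lines might look like the sketch below. It is not a transcription of the classes in Figures 1 and 2; it assumes MediaPipe's legacy `mp.solutions.hands` API plus OpenCV, and the file name `hand_tracking.py`, the class `HandDetector` and its methods are hypothetical names chosen for illustration. MediaPipe returns landmark coordinates normalised to the 0 to 1 range, so `find_position` converts them to pixel coordinates.

```python
# hand_tracking.py (hypothetical module name): a minimal hand detector sketch.
# Assumes `pip install mediapipe opencv-python` and MediaPipe's legacy
# `mp.solutions.hands` API; the heavy imports happen inside the class so the
# pure helper below can be used (and tested) without them.


def landmarks_to_pixels(landmarks, width, height):
    """Convert normalised landmarks (x, y in 0..1) to (id, x_px, y_px) tuples."""
    return [(i, int(lm.x * width), int(lm.y * height)) for i, lm in enumerate(landmarks)]


class HandDetector:
    def __init__(self, max_hands=1, detection_conf=0.5, tracking_conf=0.5):
        import mediapipe as mp

        self._mp_hands = mp.solutions.hands
        self._drawing = mp.solutions.drawing_utils
        self._hands = self._mp_hands.Hands(
            static_image_mode=False,  # video stream: track hands between frames
            max_num_hands=max_hands,
            min_detection_confidence=detection_conf,
            min_tracking_confidence=tracking_conf,
        )
        self._results = None

    def find_hands(self, frame_bgr, draw=True):
        """Run detection on a BGR frame; optionally draw the landmarks in place."""
        import cv2

        rgb = cv2.cvtColor(frame_bgr, cv2.COLOR_BGR2RGB)  # MediaPipe expects RGB
        self._results = self._hands.process(rgb)
        if draw and self._results.multi_hand_landmarks:
            for hand in self._results.multi_hand_landmarks:
                self._drawing.draw_landmarks(
                    frame_bgr, hand, self._mp_hands.HAND_CONNECTIONS
                )
        return frame_bgr

    def find_position(self, frame_bgr, hand_index=0):
        """Pixel coordinates of the 21 landmarks of one detected hand, or []."""
        if self._results is None or not self._results.multi_hand_landmarks:
            return []
        hands = self._results.multi_hand_landmarks
        if hand_index >= len(hands):
            return []
        height, width = frame_bgr.shape[:2]
        return landmarks_to_pixels(hands[hand_index].landmark, width, height)
```

In the main program, each frame returned by `cv2.VideoCapture(0).read()` would be passed through `find_hands` and then `find_position`, giving a list of `(id, x, y)` tuples for the finger test.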

Now that we can detect a hand, we need an algorithm to find out how many fingers are open. For this, MediaPipe provides a built-in hand landmark model that locates 21 landmarks (key points) on the hand, numbered from 0 (the wrist) to 20 (the tip of the little finger). The image below shows this numbering.
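For reference, the numbering can be written out as a lookup table. The names below follow the `HandLandmark` enum in MediaPipe's hands solution (with MediaPipe installed, `mp.solutions.hands.HandLandmark.INDEX_FINGER_TIP` should equal 8); the constants `LANDMARK_NAMES` and `LANDMARK_INDEX` are illustrative names, not part of any library.

```python
# The 21 MediaPipe hand landmarks, in index order (0 = wrist, 20 = little fingertip).
# Each finger runs from its base joint (MCP) through PIP and DIP to the tip;
# the thumb's joints are CMC, MCP and IP instead.
LANDMARK_NAMES = [
    "WRIST",
    "THUMB_CMC", "THUMB_MCP", "THUMB_IP", "THUMB_TIP",
    "INDEX_FINGER_MCP", "INDEX_FINGER_PIP", "INDEX_FINGER_DIP", "INDEX_FINGER_TIP",
    "MIDDLE_FINGER_MCP", "MIDDLE_FINGER_PIP", "MIDDLE_FINGER_DIP", "MIDDLE_FINGER_TIP",
    "RING_FINGER_MCP", "RING_FINGER_PIP", "RING_FINGER_DIP", "RING_FINGER_TIP",
    "PINKY_MCP", "PINKY_PIP", "PINKY_DIP", "PINKY_TIP",
]

# Reverse lookup: name -> index, e.g. LANDMARK_INDEX["INDEX_FINGER_TIP"] == 8
LANDMARK_INDEX = {name: i for i, name in enumerate(LANDMARK_NAMES)}

# Print the tip/PIP pair used for each non-thumb finger.
for finger in ("INDEX_FINGER", "MIDDLE_FINGER", "RING_FINGER", "PINKY"):
    print(finger, "tip:", LANDMARK_INDEX[finger + "_TIP"], "PIP:", LANDMARK_INDEX[finger + "_PIP"])
```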

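Taking the index finger as an example: point 8 is its tip and point 6 is the middle (PIP) joint below it. In image coordinates the y-axis points downward, so if the y-coordinate of point 8 is less than that of point 6, the fingertip is above the joint and the finger is open. The same test works for the middle, ring and little fingers (tips 12, 16 and 20 against joints 10, 14 and 18). The thumb folds sideways rather than downward, so it is typically checked by comparing the x-coordinates of points 4 and 3 instead. Applying these checks to every finger tells us which fingers are open and which are not.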
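The checks above can be sketched as a small function. It assumes `points` is a list of 21 `(x, y)` pixel coordinates indexed by landmark number (for `(id, x, y)` tuples, drop the id first). The thumb rule is an assumption: which way an open thumb points along x depends on which hand is shown and whether the frame is mirrored, so it is exposed as the hypothetical `thumb_out_is_left` parameter. The default of `False` suits a right hand with the palm facing an unmirrored webcam image, where the thumb sticks out towards the right of the image.

```python
# Sketch: decide which fingers are open from 21 landmark points.
# Image coordinates: x grows to the right, y grows DOWNWARD.

TIP_IDS = (8, 12, 16, 20)  # index, middle, ring and little fingertips
PIP_IDS = (6, 10, 14, 18)  # the PIP joint two landmarks below each tip
THUMB_TIP, THUMB_IP = 4, 3


def fingers_open(points, thumb_out_is_left=False):
    """Return [thumb, index, middle, ring, little] as booleans (True = open).

    `points[i]` is the (x, y) pixel position of landmark i.
    `thumb_out_is_left` (hypothetical parameter) says whether an extended
    thumb points towards smaller x in the image.
    """
    if len(points) < 21:
        raise ValueError("expected 21 landmark points")
    tip_x, ip_x = points[THUMB_TIP][0], points[THUMB_IP][0]
    thumb = tip_x < ip_x if thumb_out_is_left else tip_x > ip_x
    # A finger is open when its tip is ABOVE its PIP joint, i.e. has a smaller y.
    others = [points[tip][1] < points[pip][1] for tip, pip in zip(TIP_IDS, PIP_IDS)]
    return [thumb] + others


def count_fingers(points, thumb_out_is_left=False):
    """Number of open fingers (0 to 5), i.e. how many LEDs to light."""
    return sum(fingers_open(points, thumb_out_is_left))
```

These comparisons only hold while the hand is held roughly upright; a hand tilted sideways would need a rotation-independent test.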

Conclusion

Voila! We have built a system of LEDs that can be controlled using only our fingers. This is, of course, a small-scale setup that uses 5 mm LEDs and a webcam to view our hand. With more sophisticated hardware and software, the same idea could be scaled up to a larger system: for instance, the lights of a room could be switched by showing a hand, giving home automation a whole new twist. The possibilities are limitless!