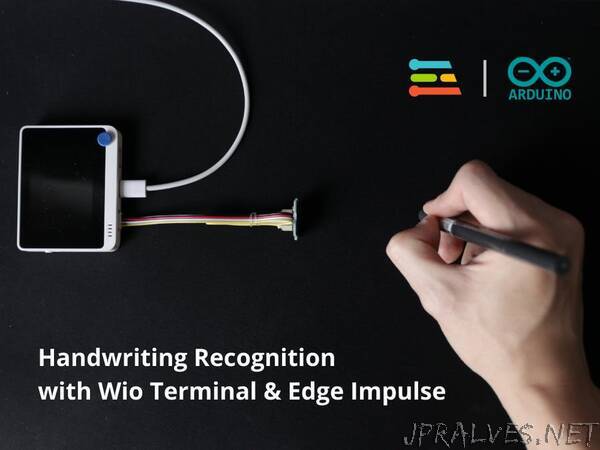

“Using just a single ToF sensor and machine learning, train and deploy a handwriting gesture recognition device on the Wio Terminal.

In today’s tutorial, I’ll show you how to build a machine-learning-based handwriting recognition device with the Wio Terminal and Edge Impulse. Follow this detailed guide to learn how a single time-of-flight (ToF) sensor can recognise handwriting gestures and translate them to text!

In this comprehensive tutorial, we will cover:

- Key Concepts: Time-of-Flight Sensors, Machine Learning & TinyML

- Setting up the development environment

- How to Perform Data Collection with Edge Impulse

- Designing, Training, Evaluating & Deploying the Machine Learning model with Edge Impulse

- Implementing Live Inferences on the Wio Terminal with Arduino

- Potential Improvements to this Project

Project Overview: Handwriting Recognition

Handwriting recognition has been a popular field of development ever since digitalisation took hold on a global scale. One common use case that you might be familiar with is the conversion of handwritten text into computer-readable formats through optical character recognition, or OCR.

In addition to camera-based systems, there are many other ways to achieve handwriting recognition, such as with the touchscreens on our mobile phones and tablets, or movement-based tracking with inertial measurement units (IMUs). However, the project that we will be covering today is far simpler than those: we will use nothing but a single time-of-flight sensor to recognise handwriting gestures.

Let’s first have a look at a video demonstration of the project in action.”