“We continue our exploration of Machine Learning on a giant tiny device, the Seeed XIAO BLE Sense, this time classifying sound waves.

Introduction

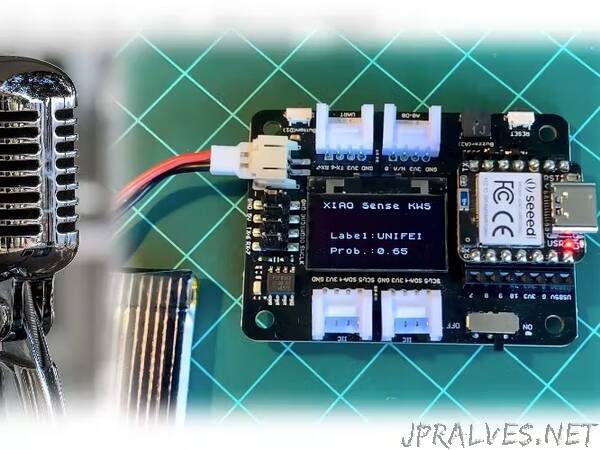

In my tutorial, TinyML Made Easy: Anomaly Detection & Motion Classification, we explored Embedded Machine Learning, or simply TinyML, running on the robust and still very tiny Seeed XIAO BLE Sense. In that tutorial, besides installing and testing the device, we explored motion classification using real data signals from its onboard accelerometer. In this new project, we will use the same XIAO BLE Sense to classify sound, specifically for “Keyword Spotting” (KWS). KWS is a typical TinyML application and an essential part of a voice assistant.

But how does a voice assistant work?

To start, it is essential to realize that voice assistants on the market, like Google Home or Amazon Echo Dot, only react to humans when they are “woken up” by particular keywords, such as “Hey Google” on the first and “Alexa” on the second.

Stage 1: A small microprocessor inside the Echo Dot or Google Home continuously listens to ambient sound, waiting for the keyword to be spotted. This detection uses a TinyML model running at the edge (the KWS application).

Stage 2: Only when the KWS application in Stage 1 triggers is the data sent to the cloud and processed by a larger model.
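
The two stages can be pictured as a simple cascade. The Python sketch below is only an illustration of that idea, not how the Echo Dot or Google Home is actually implemented: `run_cascade`, `keyword_detected`, `send_to_cloud`, and `frames_after_wake` are hypothetical names, and text strings stand in for audio frames.

```python
from typing import Callable, Iterable, List


def run_cascade(
    frames: Iterable[str],
    keyword_detected: Callable[[str], bool],  # Stage 1: small on-device model (assumed)
    send_to_cloud: Callable[[str], str],      # Stage 2: larger cloud model (assumed)
    frames_after_wake: int = 3,               # hypothetical length of the listening window
) -> List[str]:
    """Run Stage 1 while idle; forward frames to Stage 2 only after a wake-up."""
    responses = []
    remaining = 0  # frames still to forward after the last wake-up
    for frame in frames:
        if remaining > 0:
            # The device is awake: this frame goes to the larger model.
            responses.append(send_to_cloud(frame))
            remaining -= 1
        elif keyword_detected(frame):
            # Keyword spotted locally: open the window for the next frames.
            remaining = frames_after_wake
        # Otherwise the frame is dropped and never leaves the device.
    return responses


if __name__ == "__main__":
    stream = ["noise", "hey google", "what", "time", "is it", "noise"]
    result = run_cascade(
        stream,
        keyword_detected=lambda f: f == "hey google",
        send_to_cloud=lambda f: f.upper(),
    )
    print(result)  # ['WHAT', 'TIME', 'IS IT']
```

In this toy version, the frames before the keyword and after the listening window are discarded locally, which mirrors the point of Stage 1: the always-on part stays small and on the device.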

In this project, we will focus on Stage 1 (KWS, or Keyword Spotting), using the XIAO BLE Sense, whose onboard digital microphone will be used to spot the keyword.

If you want to go deeper into a full project, please see my tutorial, Building an Intelligent Voice Assistant From Scratch, where I emulate a Google Assistant on a Raspberry Pi and an Arduino Nano 33 BLE.”