“As we scale AI and machine learning to a broader set of tasks in enterprise and industry applications, it is imperative to learn more from less. Data augmentation, which improves learning by automatically synthesizing new training samples, is one important tool, especially when there isn’t enough training data. This is the case in few-shot learning, where only one or very few samples are available per category. Most prior work on few-shot image classification investigates the ‘single-label’ scenario, in which every training image contains only a single object and therefore has a single category label. A more challenging and realistic scenario, which prior work has not explored extensively, is multi-label few-shot image classification: only a few training samples are available, and each image can carry more than one label.

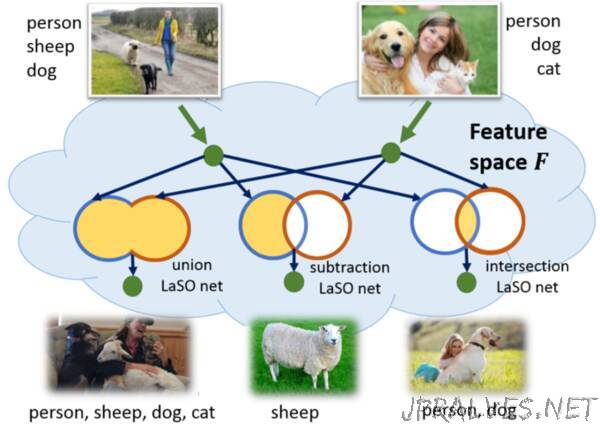

To advance this topic, we investigate multi-label few-shot image classification in our paper presented at the IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2019) in June 2019. The paper, titled “LaSO: Label-Set Operations networks for multi-label few-shot learning,” proposes a new method for training deep neural networks that combines pairs of image samples, each with its own set of labels, to synthesize new samples with ‘merged’ labels. As an example, consider the two images in Figure 1, one depicting ‘a person walking a sheep and a dog’ and the other depicting ‘a person holding a dog and a cat’. The labels of the first image are ‘person,’ ‘sheep,’ and ‘dog,’ and those of the second are ‘person,’ ‘dog,’ and ‘cat.’ Given these two images, the LaSO networks synthesize novel training samples corresponding to the union, intersection, and subtraction of their label sets. The ‘union’ produces a sample labeled ‘person,’ ‘dog,’ ‘cat,’ and ‘sheep’; the ‘intersection’ produces a sample labeled ‘person’ and ‘dog’; and the ‘subtraction’ (first image minus second) produces a sample labeled ‘sheep’ alone. The LaSO networks operate directly in the feature space learned by a deep neural network.”
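The label bookkeeping behind the three operations can be sketched with Python sets. This is a minimal illustration, under stated assumptions, of how the target labels of each synthesized sample follow from the two input label sets for the Figure 1 example. It is not the LaSO networks themselves, which act on learned feature vectors. The vocabulary order, the helper names `label_set_ops` and `to_multi_hot`, and the multi-hot target encoding are hypothetical choices made for illustration.

```python
# Hypothetical label vocabulary for the Figure 1 example (an assumption for illustration).
VOCAB = ["person", "dog", "cat", "sheep"]


def label_set_ops(labels_a, labels_b):
    """Return the label sets that union, intersection, and subtraction
    (A minus B) would assign to samples synthesized from images A and B."""
    a, b = set(labels_a), set(labels_b)
    return {
        "union": a | b,          # every label present in either image
        "intersection": a & b,   # labels shared by both images
        "subtraction": a - b,    # labels in A that do not appear in B
    }


def to_multi_hot(labels, vocab=VOCAB):
    """Encode a label set as a 0/1 vector over the vocabulary, a common
    target format for a multi-label classifier (assumed here)."""
    return [1 if name in labels else 0 for name in vocab]


image_1 = {"person", "sheep", "dog"}  # 'a person walking a sheep and a dog'
image_2 = {"person", "dog", "cat"}    # 'a person holding a dog and a cat'

for op, labels in label_set_ops(image_1, image_2).items():
    print(op, sorted(labels), to_multi_hot(labels))
# union ['cat', 'dog', 'person', 'sheep'] [1, 1, 1, 1]
# intersection ['dog', 'person'] [1, 1, 0, 0]
# subtraction ['sheep'] [0, 0, 0, 1]
```

Note that subtraction is not symmetric: `label_set_ops(image_2, image_1)["subtraction"]` would yield `{'cat'}` instead, so the order of the two input samples matters for that operation.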