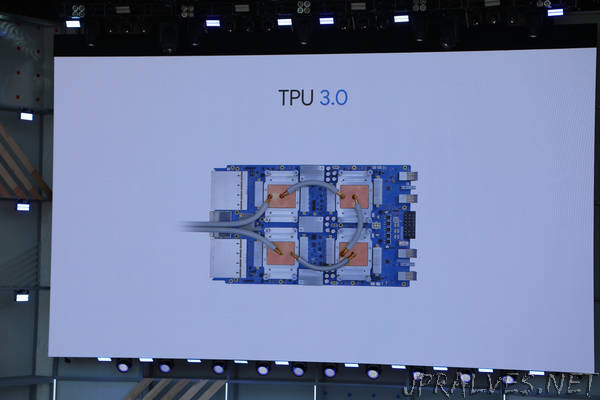

“As the war for creating customized AI hardware heats up, Google announced at Google I/O 2018 that it is rolling out its third generation of silicon, the Tensor Processing Unit 3.0.

Google CEO Sundar Pichai said the new TPU pod is eight times more powerful than last year's, with up to 100 petaflops in performance. Google joins pretty much every other major company in looking to create custom silicon in order to handle its machine learning operations. And while multiple frameworks for developing machine learning tools have emerged, including PyTorch and Caffe2, this one is optimized for Google’s TensorFlow. Google is looking to make Google Cloud an omnipresent platform at the scale of Amazon, and offering better machine learning tools is quickly becoming table stakes.

Amazon and Facebook are both working on their own kind of custom silicon. Facebook’s hardware is optimized for its Caffe2 framework, which is designed to handle the massive information graphs it has on its users. You can think about it as taking everything Facebook knows about you (your birthday, your friend graph, and everything that goes into the news feed algorithm) and feeding it into a complex machine learning framework that works best for its own operations. That may have ended up requiring a customized approach to hardware. We know less about Amazon’s goals here, but it also wants to own the cloud infrastructure ecosystem with AWS.

All this has also spun up an increasingly large and well-funded startup ecosystem looking to create customized hardware targeted toward machine learning. There are startups like Cerebras Systems, SambaNova Systems, and Mythic, with a half dozen or so beyond that as well (not even including the activity in China). Each is looking to exploit a similar niche, which is to find a way to outmaneuver Nvidia on price or performance for machine learning tasks. Most of those startups have raised more than $30 million.

Google unveiled its second-generation TPU processor at I/O last year, so it wasn’t a huge surprise that we’d see another one this year. We’d heard from sources for weeks that it was coming, and that the company is already hard at work figuring out what comes next. Google at the time touted performance, though the point of all these tools is to make machine learning a little easier and more palatable in the first place.

This is also the first time Google has had to include liquid cooling in its data centers, CEO Sundar Pichai said. Heat dissipation is an increasingly difficult problem for companies looking to create customized hardware for machine learning.

There are a lot of questions around building custom silicon, however. It may be that developers don’t need a super-efficient piece of silicon when an Nvidia card that’s a few years old can do the trick. But data sets are getting ever larger, and having the biggest and best data set is what creates defensibility for any company these days. Just the prospect of making machine learning easier and cheaper as companies scale may be enough to get them to adopt something like GCP.

Intel, too, is looking to get in here with its own products. Intel has been beating the drum on FPGAs as well, which are designed to be more modular and flexible as the needs for machine learning change over time. But again, the knock there is price and difficulty, as programming for FPGAs can be a hard problem in which not many engineers have expertise. Microsoft is also betting on FPGAs, and unveiled what it’s calling Brainwave just yesterday at its BUILD conference for its Azure cloud platform, which is increasingly a significant portion of its future potential.

Google more or less seems to want to own the entire stack of how we operate on the internet. It starts at the TPU, with TensorFlow layered on top of that. If it manages to succeed there, it gets more data, makes its tools and services faster and faster, and eventually reaches a point where its AI tools are so far ahead that developers and users are locked into its ecosystem. Google is at its heart an advertising business, but it’s gradually expanding into new business segments that all require robust data sets and operations to learn human behavior.

Now the challenge will be having the best pitch for developers to not only get them into GCP and other services, but also keep them locked into TensorFlow. But as Facebook increasingly looks to challenge that with alternate frameworks like PyTorch, there may be more difficulty than originally thought. Facebook unveiled a new version of PyTorch at its main annual conference, F8, just last month. We’ll have to see if Google is able to respond adequately to stay ahead, and that starts with a new generation of hardware.”