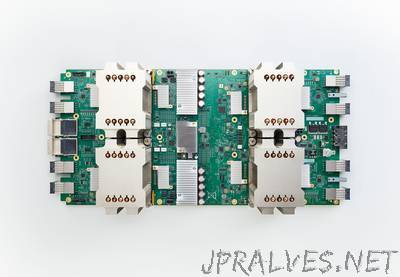

“We’re excited to announce that our second-generation Tensor Processing Units (TPUs) are coming to Google Cloud to accelerate a wide range of machine learning workloads, including both training and inference. We call them Cloud TPUs, and they will initially be available via Google Compute Engine.

“We’ve witnessed extraordinary advances in machine learning (ML) over the past few years. Neural networks have dramatically improved the quality of Google Translate, played a key role in ranking Google Search results and made it more convenient to find the photos you want with Google Photos. Machine learning allowed DeepMind’s AlphaGo program to defeat Lee Sedol, one of the world’s top Go players, and also made it possible for software to generate natural-looking sketches.

“These breakthroughs required enormous amounts of computation, both to train the underlying machine learning models and to run those models once they’re trained (this is called “inference”). We’ve designed, built and deployed a family of Tensor Processing Units, or TPUs, to allow us to support larger and larger amounts of machine learning computation, first internally and now externally.

“While our first TPU was designed to run machine learning models quickly and efficiently—to translate a set of sentences or choose the next move in Go—those models still had to be trained separately. Training a machine learning model is even more difficult than running it, and days or weeks of computation on the best available CPUs and GPUs are commonly required to reach state-of-the-art levels of accuracy.

“Research and engineering teams at Google and elsewhere have made great progress scaling machine learning training using readily-available hardware. However, this wasn’t enough to meet our machine learning needs, so we designed an entirely new machine learning system to eliminate bottlenecks and maximize overall performance. At the heart of this system is the second-generation TPU we’re announcing today, which can both train and run machine learning models.”