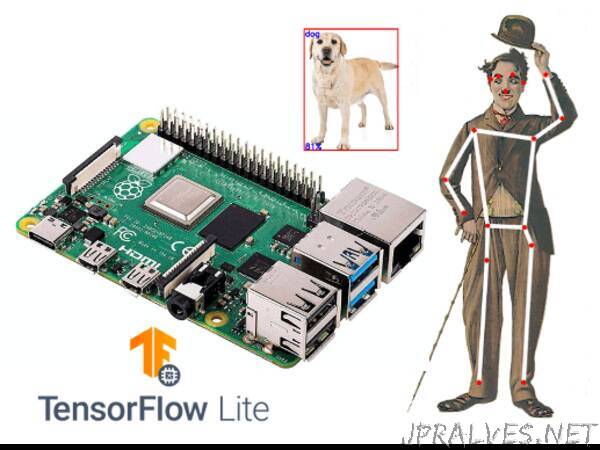

“Image Recognition, Object Detection, and Pose Estimation Using TensorFlow Lite on a Raspberry Pi

Introduction

What is Edge (or Fog) Computing?

Gartner defines edge computing as: “a part of a distributed computing topology in which information processing is located close to the edge — where things and people produce or consume that information.”

In other words, edge computing brings computation (and some data storage) closer to the devices where data is generated or consumed, especially in real time, rather than relying on a distant cloud-based central system. Processing data near its source avoids the round trip to the cloud, which lowers latency and reduces transmission and processing costs. In a way, it is a kind of “return to the recent past,” when all the computational work was done locally on a desktop and not in the cloud.

Edge computing emerged in response to the exponential growth in the number of Internet of Things (IoT) devices that connect to the internet, either to receive information from the cloud or to deliver data back to it. Many of these devices generate enormous amounts of data during operation.

Edge computing opens new possibilities for IoT applications, particularly those that rely on machine learning (ML) for tasks such as object and pose detection, image (and face) recognition, language processing, and obstacle avoidance. Image data is an excellent addition to IoT, but it is also a significant consumer of resources (power, memory, and processing). Image processing “at the edge”, running classic AI/ML models, is a great leap!

Machine learning can be divided into two separate processes, training and inference, as explained in the Gartner blog:

Training: Training refers to the process of creating a machine learning algorithm. Training involves using a deep-learning framework (e.g., TensorFlow) and training dataset (see the left-hand side of the above figure). IoT data provides a source of training data that data scientists and engineers can use to train machine learning models for various cases, from failure detection to consumer intelligence.

Inference: Inference refers to the process of using a trained machine-learning algorithm to make a prediction. IoT data can be used as the input to a trained machine learning model, enabling predictions that can guide decision logic on the device, at the edge gateway, or elsewhere in the IoT system (see the right-hand side of the above figure).
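
To make the split concrete, here is a minimal sketch in plain Python. It is not a deep-learning model: it uses a simple nearest-mean classifier, and the temperature readings, the labels, and the function names `train` and `infer` are all hypothetical. The point is only that training runs once over labeled data, while inference reuses the stored result on each new reading.

```python
from statistics import mean


def train(samples):
    """Training: learn one mean reading per label from labeled samples."""
    by_label = {}
    for reading, label in samples:
        by_label.setdefault(label, []).append(reading)
    # The "model" is just a dict mapping each label to its mean reading.
    return {label: mean(values) for label, values in by_label.items()}


def infer(model, reading):
    """Inference: return the label whose learned mean is closest to the reading."""
    return min(model, key=lambda label: abs(model[label] - reading))


# Hypothetical labeled sensor data (temperature in degrees Celsius).
training_data = [
    (41.0, "normal"), (43.5, "normal"), (39.5, "normal"),
    (78.0, "overheating"), (82.5, "overheating"), (80.0, "overheating"),
]

model = train(training_data)   # done once, e.g. on a workstation or in the cloud
print(infer(model, 45.2))      # done repeatedly, e.g. on the edge device
print(infer(model, 76.9))
```

Training needs the whole labeled dataset, while inference needs only the small stored model and one new reading, which is why inference is the part that fits on a constrained device.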

TensorFlow Lite is an open-source deep learning framework that enables on-device machine learning inference with low latency and a small binary size. It is designed to make it easy to perform machine learning on devices, “at the edge” of the network, instead of sending data back and forth to a server.

Performing machine learning on-device can help to improve:

Latency: there’s no round-trip to a server

Privacy: no data needs to leave the device

Connectivity: an Internet connection isn’t required

Power consumption: network connections are power-hungry

TensorFlow Lite (TFLite) consists of two main components:

The TFLite converter, which converts TensorFlow models into an efficient form for use by the interpreter, and can introduce optimizations to improve binary size and performance.

The TFLite interpreter, which runs specially optimized models on many different hardware types, including mobile phones, embedded Linux devices, and microcontrollers.”
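
As a rough sketch of how the two components fit together in Python, the example below converts a toy model with `tf.lite.TFLiteConverter` and then runs it with `tf.lite.Interpreter`. The toy model (a fixed 4x2 weight matrix followed by a softmax), the function name `convert_and_run`, and the input values are made up for illustration. The sketch assumes a TensorFlow 2.x installation that includes the `tf.lite` module; the imports sit inside the function so that it simply returns `None` when TensorFlow is not installed.

```python
def convert_and_run():
    """Convert a toy model to TFLite, run one inference, and return the output.

    Returns None if TensorFlow (or NumPy) is not installed.
    """
    try:
        import numpy as np
        import tensorflow as tf
    except ImportError:
        return None

    # Hypothetical toy model: 4 input features -> 2 class probabilities.
    weights = tf.constant(
        [[0.5, -0.5], [0.1, 0.2], [-0.3, 0.8], [0.7, 0.0]], dtype=tf.float32
    )

    @tf.function(input_signature=[tf.TensorSpec(shape=[1, 4], dtype=tf.float32)])
    def toy_model(x):
        return tf.nn.softmax(tf.matmul(x, weights))

    # Converter: turn the TensorFlow function into TFLite model bytes,
    # asking for the default size/latency optimizations.
    converter = tf.lite.TFLiteConverter.from_concrete_functions(
        [toy_model.get_concrete_function()]
    )
    converter.optimizations = [tf.lite.Optimize.DEFAULT]
    tflite_model = converter.convert()

    # Interpreter: load the converted model and run a single inference.
    interpreter = tf.lite.Interpreter(model_content=tflite_model)
    interpreter.allocate_tensors()
    input_info = interpreter.get_input_details()[0]
    output_info = interpreter.get_output_details()[0]

    sample = np.array([[1.0, 2.0, 3.0, 4.0]], dtype=np.float32)
    interpreter.set_tensor(input_info["index"], sample)
    interpreter.invoke()
    return interpreter.get_tensor(output_info["index"])


if __name__ == "__main__":
    print(convert_and_run())
```

In practice the conversion step usually runs on a development machine, and only the converted model (typically saved as a `.tflite` file) needs to reach the device, where the interpreter loads it.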