A UT Dallas professor hopes to provide a voice to individuals who have no larynx and are unable to produce vocal sounds on their own.

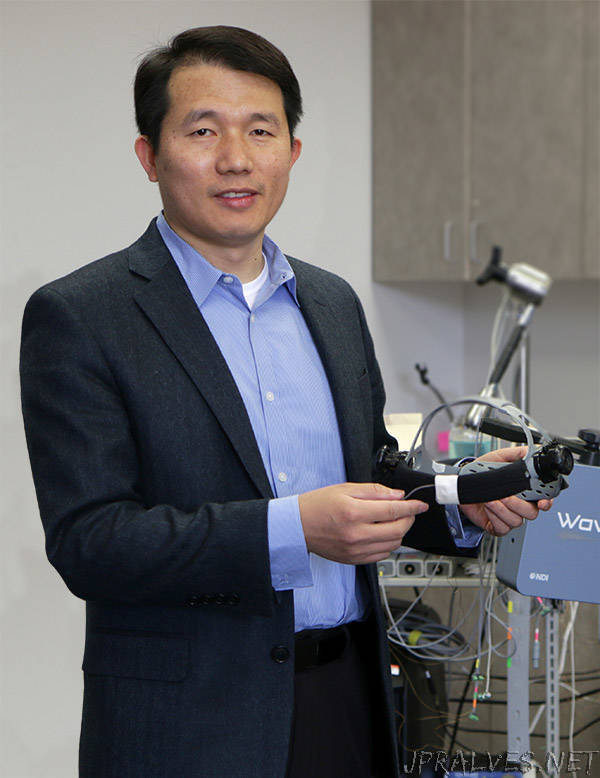

Dr. Jun Wang, assistant professor of bioengineering in the Erik Jonsson School of Engineering and Computer Science, is developing a silent speech processor in which sensors will recognize the movements of a person's lips and tongue, and the system will then translate those movements into spoken words.

“I hope this project can improve the quality of life for those who don’t have a larynx. There are so many of these patients who won’t talk or go out in public because they feel self-conscious,” said Wang, also an assistant professor of communication sciences and disorders in the School of Behavioral and Brain Sciences.

Currently, people without a larynx have three options. They can mouth words with no sound. They can speak in a hoarse whisper using esophageal speech or a voice prosthesis. Or they can use an external, hand-held device called an electrolarynx, which creates a mechanical-sounding voice.

Wang is using a commercial device with wired sensors to collect tongue and lip motion data from people with a voice and from people without one. The data will be used to train a machine-learning algorithm to predict what patients are trying to say, and the system will then produce the corresponding words and sounds.

Wang eventually plans to develop a small, wireless device that resembles a Bluetooth headset. Patients would wear it, and its sensors would identify their lip and tongue movements and translate them into speech.

“We’ve made progress, but it will still take some time to reach the final step. When you design small hardware, it must be very simple and reliable, include computational power and good speakers that can play a sound,” he said.

Wang, who is based at UT Dallas’ Callier Center for Communication Disorders, is collaborating with a professor at Georgia Tech’s College of Engineering to develop the hardware for the project.

Plano businessman Fred Venners is one patient who hopes Wang's research becomes a reality. He had his larynx removed after a cancer diagnosis in 2014 and now uses a voice prosthesis device that allows him to speak in a robotic-sounding, electronic voice.

Recently, Venners volunteered for Wang's project by having sensors on his lips and tongue connected to a computer that captures movement information.

“We place two small sensors on a person’s tongue and two small sensors on the lips,” Wang said. “So they’re actually wired, but there’s no pain. It just feels strange.”

Venners is hopeful the project will yield positive results.

“I think it will help people in situations like me, unless they have the ‘poor pitiful me’ thinking,” he said. “If it works, it will be a wonderful thing.”

Wang has already developed a software model that translates tongue and lip movements, captured with wired sensors, into vocal sounds. The next steps are to upgrade the software so it can track movements through wireless sensors and to build the wireless sensor device itself.

“The algorithms that we already have developed demonstrate the feasibility of this project,” he said. “So I believe it is very likely that we will have a working prototype within a few years.”

The team's latest algorithm, which predicts speech from tongue and lip movements in a speaker-independent way (that is, for people it was not specifically trained on), was recently published in IEEE/ACM Transactions on Audio, Speech, and Language Processing.

The research is supported by the National Institutes of Health and by the American Speech-Language-Hearing Foundation.