“FAU scientists are working on a collaborative project to improve computer processing power.

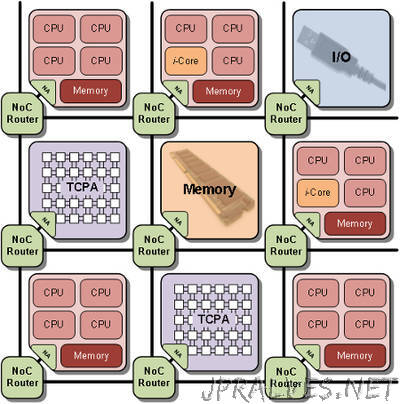

Computers require ever more processing power to ensure that demanding programs run smoothly. Current technology will not be able to keep up for long, and a new concept is needed in the long term. Together with their partners in the SFB/Transregio 89 collaborative project “Invasive Computing”, computer scientists at Friedrich-Alexander-Universität Erlangen-Nürnberg (FAU) are currently developing a method that distributes processing power to programs based on their needs, which will enable computers to cope with future processing requirements.

Most people are familiar with a video that keeps pausing every few seconds and just won’t buffer properly. These stops and starts are caused by the operating system architecture and by other applications running in the background. In today’s multi-core processors, the operating system distributes processing time and resources (e.g. memory) to applications without accurate information about what they actually require. Because the processor runs multiple tasks at once, those tasks compete for shared resources. This competition can cause unpredictable delays and frequent short interruptions, as with jerky videos. As processing power requirements increase, multi-core processor technology is reaching its limits. While it may be feasible to keep integrating more and more cores, even up to several hundred, doing so is inefficient: more cores mean more competition for shared resources, which slows down processing overall.”
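The contrast between time-sharing resources blindly and granting them according to stated needs can be illustrated with a minimal toy sketch in Python. It assumes a fixed pool of cores and applications that declare up front how many cores they need. The class `CoreAllocator` and its `request`/`release` methods are hypothetical names chosen for illustration. This is not the allocation scheme developed in the “Invasive Computing” project, only a simple model of need-based allocation in which each granted core is held exclusively, so one application does not compete with another on that core.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class CoreAllocator:
    """Toy need-based allocator (hypothetical; not the SFB/Transregio 89 design).

    Applications ask for a number of whole cores and receive them exclusively,
    instead of having the OS time-slice all cores among all applications.
    """
    total_cores: int
    owners: Dict[str, Set[int]] = field(default_factory=dict)  # app -> core ids

    def free_cores(self) -> Set[int]:
        # Cores not currently held by any application.
        used = set().union(*self.owners.values())
        return set(range(self.total_cores)) - used

    def request(self, app: str, needed: int) -> Set[int]:
        """Grant `needed` cores exclusively to `app`, or nothing if unavailable."""
        free = sorted(self.free_cores())
        if needed > len(free):
            return set()  # cannot satisfy the stated need now; caller may retry
        granted = set(free[:needed])
        self.owners.setdefault(app, set()).update(granted)
        return granted

    def release(self, app: str) -> None:
        # Return all of the application's cores to the free pool.
        self.owners.pop(app, None)


if __name__ == "__main__":
    alloc = CoreAllocator(total_cores=8)
    print(alloc.request("video", 2))   # {0, 1}
    print(alloc.request("build", 5))   # {2, 3, 4, 5, 6}
    print(alloc.request("backup", 4))  # set(): only core 7 is free
    alloc.release("build")
    print(alloc.request("backup", 4))  # {2, 3, 4, 5}
```

Rejecting a request outright when it cannot be met keeps the sketch short; a real system would more likely queue the request, grant fewer cores, or negotiate with the application.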