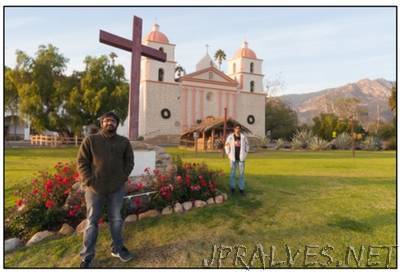

“UCSB and NVIDIA researchers develop a new technique that enables photographers to adjust image compositions after capture.

“When taking a picture, a photographer must typically commit to a composition that cannot be changed after the shutter is released. For example, when using a wide-angle lens to capture a subject in front of an appealing background, it is difficult to include the entire background and still have the subject be large enough in the frame. Positioning the subject closer to the camera will make it larger, but unwanted distortion can occur. This distortion is reduced when shooting with a telephoto lens, since the photographer can move back while maintaining the foreground subject at a reasonable size. But this causes most of the background to be excluded. In each case, the photographer has to settle for a suboptimal composition that cannot be modified later.

“As described in a technical paper to be presented July 31 at the ACM SIGGRAPH 2017 conference, UC Santa Barbara Ph.D. student Abhishek Badki and his advisor Pradeep Sen, a professor in the Department of Electrical and Computer Engineering, along with NVIDIA researchers Orazio Gallo and Jan Kautz, have developed a new system that addresses this problem. Specifically, it allows photographers to compose an image post-capture by controlling the relative positions and sizes of objects in the image. Computational Zoom, as the system is called, allows photographers the flexibility to generate novel image compositions, even some that cannot be captured by a physical camera, by controlling the sense of depth in the scene, the relative sizes of objects at different depths and the perspectives from which the objects are viewed.”
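
The wide-angle versus telephoto trade-off described in the quote can be illustrated with the ideal pinhole camera model, in which an object's height on the sensor is the focal length times the object's height divided by its distance. The sketch below is only an illustration under that assumption; it is not the Computational Zoom method, and the function names, the 36 mm sensor width, and all scene numbers are hypothetical values chosen for the example.

```python
import math


def projected_height_mm(object_height_m, distance_m, focal_length_mm):
    """Height of an object on the sensor under an ideal pinhole model."""
    return focal_length_mm * object_height_m / distance_m


def horizontal_fov_deg(focal_length_mm, sensor_width_mm=36.0):
    """Horizontal field of view for a given focal length.

    The 36 mm default is an assumed full-frame sensor width.
    """
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_length_mm)))


# Hypothetical scene: a 1.7 m tall person standing 50 m in front of a 20 m tall building.
PERSON_HEIGHT_M = 1.7
BUILDING_HEIGHT_M = 20.0
GAP_M = 50.0

# Two hypothetical shots that keep the person the same size in the frame:
# a wide-angle lens up close and a telephoto lens from farther back.
shots = {
    "wide (24 mm at 2 m)": (24.0, 2.0),
    "tele (96 mm at 8 m)": (96.0, 8.0),
}

for name, (focal_mm, person_dist_m) in shots.items():
    person = projected_height_mm(PERSON_HEIGHT_M, person_dist_m, focal_mm)
    building = projected_height_mm(BUILDING_HEIGHT_M, person_dist_m + GAP_M, focal_mm)
    fov = horizontal_fov_deg(focal_mm)
    print(f"{name}: person {person:.1f} mm, building {building:.1f} mm, FOV {fov:.1f} deg")
```

In this toy setup both shots render the person at the same size, but the telephoto shot magnifies the building and sees a much narrower slice of the scene, which is why most of the background drops out of the frame.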