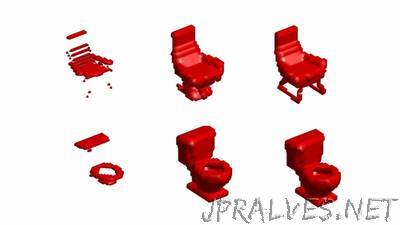

“Autonomous robots can inspect nuclear power plants, clean up oil spills in the ocean, accompany fighter planes into combat and explore the surface of Mars. Yet for all their talents, robots still can’t make a cup of tea. That’s because tasks such as turning the stove on, fetching the kettle and finding the milk and sugar require perceptual abilities that, for most machines, are still a fantasy.

Among them is the ability to make sense of 3-D objects. While it’s relatively straightforward for robots to “see” objects with cameras and other sensors, interpreting what they see, from a single glimpse, is more difficult.

Duke University graduate student Ben Burchfiel says the most sophisticated robots in the world can’t yet do what most children do automatically, but he and his colleagues may be closer to a solution. Burchfiel and his thesis advisor George Konidaris, now an assistant professor of computer science at Brown University, have developed new technology that enables machines to make sense of 3-D objects in a richer and more human-like way.

A robot that clears dishes off a table, for example, must be able to adapt to an enormous variety of bowls, platters and plates in different sizes and shapes, left in disarray on a cluttered surface.

Humans can glance at a new object and intuitively know what it is, whether it is right side up, upside down or sideways, in full view or partially obscured by other objects. Even when an object is partially hidden, we mentally fill in the parts we can’t see.

The researchers’ robot perception algorithm can simultaneously guess what a new object is and how it’s oriented, without examining it from multiple angles first. It can also “imagine” any parts that are out of view. A robot with this technology wouldn’t need to see every side of a teapot, for example, to know that it probably has a handle, a lid and a spout, and whether it is sitting upright or off-kilter on the stove.

The researchers say their approach, which they presented July 12 at the 2017 Robotics: Science and Systems Conference in Cambridge, Massachusetts, makes fewer mistakes and is three times faster than the best current methods. This is an important step toward robots that function alongside humans in homes and other real-world settings, which are less orderly and predictable than the highly controlled environment of the lab or the factory floor, Burchfiel said.

With their framework, the robot is given a limited number of training examples and uses them to generalize to new objects.”