“CPQM’s Laboratory for Quantum Information Processing has collaborated with the CDISE supercomputing team “Zhores” to emulate Google’s quantum processor. Reproducing noiseless data following the same statistics as Google’s recent experiments, the team was able to point to a subtle effect lurking in Google’s data. This effect, called a reachability deficit, was discovered by the Skoltech team in its past work. The numerics confirmed that Google’s data was on the edge of a so-called, density-dependent avalanche, which implies that future experiments will require significantly more quantum resources to perform quantum approximate optimization. The results are published in the field’s leading journal Quantum.

From the early days of numerical computing, quantum systems have appeared exceedingly difficult to emulate, though the precise reasons for this remain a subject of active research. Still, this apparently inherent difficulty of a classical computer to emulate a quantum system prompted several researchers to flip the narrative.

Scientists such as Richard Feynman and Yuri Manin speculated in the early 1980s that the unknown ingredients which seem to make quantum computers hard to emulate using a classical computer could themselves be used as a computational resource. For example, a quantum processor should be good at simulating quantum systems, since they are governed by the same underlying principles.

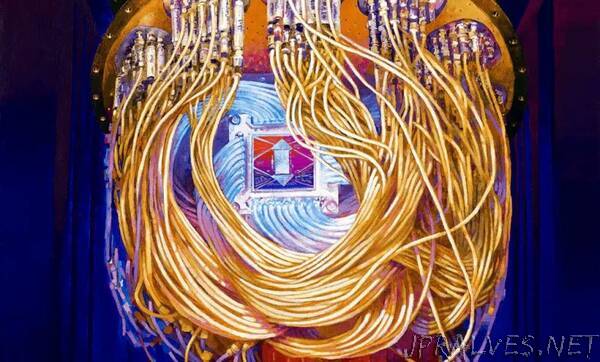

Such early ideas eventually led to Google and other tech giants creating prototype versions of the long-anticipated quantum processors. These modern devices are error-prone, they can only execute the simplest of quantum programs and each calculation must be repeated multiple times to average out the errors in order to eventually form an approximation.

Among the most studied applications of these contemporary quantum processors is the quantum approximate optimization algorithm, or QAOA (pronounced “kyoo-ay-oh-AY”). In a series of dramatic experiments, Google used its processor to probe QAOA’s performance using 23 qubits and three tunable program steps.

In a nutshell, QAOA is an approach wherein one aims to approximately solve optimization problems on a hybrid setup consisting of a classical computer and a quantum co-processor. Prototypical quantum processors such as Google’s Sycamore are currently restricticted to performing noisy and limited operations. Using a hybrid setup, the hope is to alleviate some of these systematic limitations and still recover quantum behavior to take advantage of, making approaches such as QAOA particularly attractive.

Skoltech scientists have made a series of recent discoveries related to QAOA, for example see the write-up here. Prominent among them being an effect that fundamentally limits the applicability of QAOA. They show that the density of an optimization problem — that is, the ratio between its constraints and variables — acts as a major barrier to achieving approximate solutions. Additional resources, in terms of operations run on the quantum co-processor, are required to overcome this performance limitation. These discoveries were done using pen and paper and very small emulations. They wanted to see if the effect they recently discovered manifested itself in Google’s recent experimental study.

Skoltech’s quantum algorithms lab then approached the CDISE supercomputing team led by Oleg Panarin for the significant computing resources required to emulate Google’s quantum chip. Quantum laboratory member, Senior Research Scientist Dr. Igor Zacharov worked with several others to transform the existing emulation software into a form that permits parallel computation on Zhores. After several months, the team managed to create an emulation that outputs data with the same statistical distributions as Google and showed a range of instance densities at which QAOA performance sharply degrades. They further revealed Google’s data to lie at the edge of this range beyond which the current state of the art would not suffice to produce any advantage.

The Skoltech team originally found that reachability deficits — a performance limitation induced by a problem’s constraint-to-variable ratio — were present for a kind of problem called maximum constraint satisfiability. Google, however, considered the minimization of graph energy functions. Since these problems are in the same complexity class, it gave the team conceptual hope that the problems, and later the effect, could be related. This intuition turned out to be correct. The data was generated and the findings clearly showed that reachability deficits create a type of an avalanche effect, placing Google’s data on the edge of this rapid transition beyond which longer, more powerful QAOA circuits become a necessity.”