Remember how, a few years ago, the chatter was that waferscale systems wouldn’t stand a chance? The list of barriers went on: expense, complexity, the software stack required, and plenty more. Yet Cerebras Systems propelled ahead anyway.

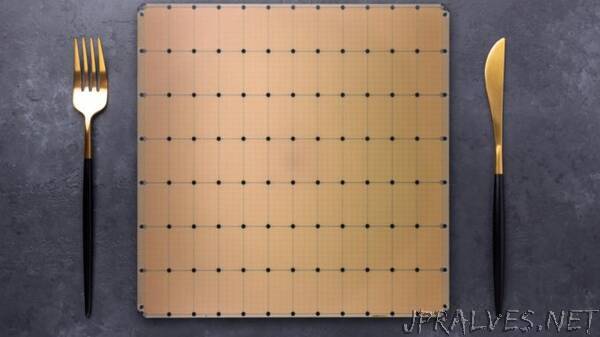

While they’re not replacing GPUs or CPUs, or even clearly outpacing the other AI chip upstarts quite yet, things do appear to be looking up for Cerebras. On the heels of some recent wins at national labs and pharma companies, including GlaxoSmithKline, the waferscale AI systems maker is unveiling its second machine, the CS-2.

A generation-hopping die shrink, microarchitecture tweaks, big memory and memory bandwidth improvements, and what appears to be a pretty plug-and-play approach to moving models from a CS-1 and even GPUs compiler-wise are all noteworthy. But what we’ll get to after the system view is where the business goes from here. All the innovation in the world means little if no one is willing to take a chance.

From a high-level view, the general architecture is the same, despite a generation-skipping shrink from 16nm with the CS-1 to 7nm. What is most notable is that Cerebras was also able to keep the power consumption for its system the same (23 kW) while adding quite a bit of memory and fabric bandwidth.

The CS-2 moves from around 400,000 cores to 850,000, jumps from 18GB to 40GB of on-chip memory, and increases memory bandwidth from 9PB/sec to 20PB/sec, with more than double the fabric bandwidth. As Cerebras co-founder and CEO Andrew Feldman tells us, all of this translates into a system with a redesigned chassis and power/cooling tweaks that is roughly 20% to 25% more expensive than its predecessor.
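To put those generational jumps side by side, here is a minimal Python sketch that computes the CS-1 to CS-2 ratios from the figures quoted above. It assumes "around 400,000" cores means exactly 400,000, reads the memory bandwidth figures as per-second rates, and uses decimal units (1GB = 1,000,000KB). The dictionary keys and function names are illustrative, not anything from Cerebras.

```python
# Figures as quoted in the article. The CS-1 core count is "around 400,000";
# treating it as exactly 400,000 is an assumption made for this sketch.
cs1 = {"cores": 400_000, "sram_gb": 18, "mem_bw_pb_s": 9}
cs2 = {"cores": 850_000, "sram_gb": 40, "mem_bw_pb_s": 20}


def ratios(old, new):
    """Return the new/old ratio for every spec the two systems share."""
    return {key: new[key] / old[key] for key in old}


def sram_per_core_kb(spec):
    """On-chip SRAM per core in KB, using decimal units (1 GB = 1e6 KB)."""
    return spec["sram_gb"] * 1e6 / spec["cores"]


scale = ratios(cs1, cs2)

if __name__ == "__main__":
    for key, value in scale.items():
        print(f"{key}: {value:.2f}x")
    print(f"CS-1 SRAM per core: {sram_per_core_kb(cs1):.1f} KB")
    print(f"CS-2 SRAM per core: {sram_per_core_kb(cs2):.1f} KB")
```

Under these assumptions, cores grow by about 2.1x and both memory capacity and memory bandwidth by about 2.2x, so memory per core stays roughly flat (about 45KB to about 47KB) while the quoted price rises by 20% to 25%.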

“With TSMC, we skipped the step from 16nm to 10nm and went right to seven, which is where you see the improvements in transistor density and power efficiency. Also, with the second generation of anything, you have more experience. The first system was all vision. After thousands of meetings and thousands of hours deployed, there’s been a lot of feedback, which we’ve looped into improvements in the microarchitecture, hardware/software codesign,” Feldman explains.

The addition of all the memory and bandwidth is the real story here but there’s not a ton of re-engineering that had to go into the CS-2. “The memory is all still SRAM but this time around we used more aggressive circuit design techniques. What we showed was that we could take waferscale to any fab geometry, any process node, and do it all in 18 months—faster than other chipmakers with a normal-sized chip from one generation to the next,” he adds.

“There were three ways we could have gone. Three buckets of change: a straight shrink, which changes nothing; microarchitecture improvements, which take advantage of the shrink and change design elements but keep the same architecture; or a complete architecture shift. We chose the second. It took some learning and improvements in the core and fabric but it’s the same dataflow architecture with the major blocks the same but with improved handling of data.”

It’s easy to think that doubling transistors and memory and bandwidth translates into a straight doubling of performance but this is so often not the case for straight chipmakers, Feldman says. “We don’t sell chips. We are a systems company. We’re making improvements in the enclosure, making cooling, power delivery, everything more efficient, making sure our compiler stack can compile anything that’s running on a CS-1 or a GPU.” He says that out of the gate, it’s possible to compile to the 850k core CS-2 with a single line of code and a TensorFlow or PyTorch model.

All of this adds quite a bit of capability within the system, but we touched on a topic that doesn’t get the attention it deserves, in part because we have decades of training that storage is something entirely separate. With a system that can do what the CS-2 purports, storage and the data pipeline are not secondary considerations for performance, and certainly not for the total cost of the system.

Feldman says that to get the true performance out of the system, the upstream servers (seven or eight of them) need to have new Epyc or the latest Intel processors. High performance storage with a WekaIO or NetApp file system and server is also needed, along with a 100GbE switch.

“The data pipeline definitely matters. If you can’t feed the machine data quickly, you can’t work on data you don’t have. We do have recommendations for the upstream servers and storage that hands off. We’re taking in 12x100GbE but most of our customers already have servers that can fill that pipe. They have fast Epyc or Intel servers with two 100GbE NICs and hang a file system off the group of servers and the data moves to the upstream servers for pre-processing and streams data to us over 100GbE. We have to ensure the bottlenecks aren’t upstream.” So in other words, yes, the Cerebras CS-1 would cost around $2.5 million (or so) but the infrastructure around it adds up and is something to consider if your high-test servers are in use for production work and can’t be lent to the training process.
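The pipe Feldman describes can be sized with simple arithmetic. The sketch below, in Python, takes the 12 x 100GbE ingest and the two 100GbE NICs per upstream server from the quote; the `efficiency` parameter (the fraction of line rate a server actually sustains) and all names are assumptions added for illustration, not Cerebras guidance.

```python
import math

LINKS_INTO_SYSTEM = 12   # "12x100GbE" into the system, from the quote
LINK_GBIT_S = 100        # 100GbE line rate, in gigabits per second
NICS_PER_SERVER = 2      # two 100GbE NICs per upstream server, from the quote


def ingest_gbit_s():
    """Aggregate ingest bandwidth in gigabits per second."""
    return LINKS_INTO_SYSTEM * LINK_GBIT_S


def ingest_gbyte_s():
    """Aggregate ingest bandwidth in gigabytes per second (8 bits per byte)."""
    return ingest_gbit_s() / 8


def min_servers(efficiency=1.0):
    """Fewest upstream servers that can fill the pipe.

    `efficiency` is a hypothetical knob: the fraction of NIC line rate each
    server sustains after pre-processing and protocol overhead.
    """
    per_server_gbit_s = NICS_PER_SERVER * LINK_GBIT_S * efficiency
    return math.ceil(ingest_gbit_s() / per_server_gbit_s)


if __name__ == "__main__":
    print(f"Ingest: {ingest_gbit_s()} Gb/s ({ingest_gbyte_s():.0f} GB/s)")
    print(f"Servers at line rate: {min_servers()}")
    print(f"Servers at 80% sustained: {min_servers(0.8)}")
```

At full line rate six servers would cover the 1.2Tb/sec (150GB/sec) pipe; assuming each server sustains about 80% of line rate, the count rises to eight, which is in the range of the seven or eight upstream servers mentioned above.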

There are several companies and institutions that have figured out the data pipeline for their CS-1 and several lined up for the CS-2, Feldman says. Recall that Cerebras has CS-1s at Argonne, LLNL, and Edinburgh, with a system doing drug discovery work now at GlaxoSmithKline.

Feldman says that they will have announcements in the coming months of use cases in heavy manufacturing, biotech, pharma, military/intelligence. “We’re being used for important work in cancer and Covid research, new materials discovery, brain injuries in soldiers, and as a cloud for NSF researchers.”

But of course, they have a heavy job of getting new converts, particularly those who have made big investments in GPUs. That is an even tougher task with the A100 in the lineup and OEMs who are quick to put those in big customers’ hands. Cerebras, on the other hand, will go it alone for now, being its own system maker without OEMs fanning out for them.

When asked about how Cerebras will pop in to replace GPUs and what tips the scales for customers, Feldman says it’s the companies that are struggling to get efficient training runs on their current clusters, which limits what they can do. If a training run takes almost a month and Cerebras can train the same workload in a few hours, it changes what companies think is possible. Instead of waiting, especially for a run that never converges, they can keep pace with their own ideas, train more, and ideally be more productive.

But what workloads are worth the investment in something new? Drug discovery and materials science, areas where language models are taking deep hold, are the high-ROI sweet spot for enterprise and research, perhaps enough to get them to make the switch.

“At GSK we are applying machine learning to make better predictions in drug discovery, so we are amassing data – faster than ever before – to help better understand disease and increase success rates,” said Kim Branson, SVP, AI/ML, GlaxoSmithKline. “Last year we generated more data in three months than in our entire 300-year history. With the Cerebras CS-1, we have been able to increase the complexity of the encoder models that we can generate, while decreasing their training time by 80x. We eagerly await the delivery of the CS-2 with its improved capabilities so we can further accelerate our AI efforts and, ultimately, help more patients.”

“As an early customer of Cerebras solutions, we have experienced performance gains that have greatly accelerated our scientific and medical AI research,” said Rick Stevens, Argonne National Laboratory Associate Laboratory Director for Computing, Environment and Life Sciences. “The CS-1 allowed us to reduce the experiment turnaround time on our cancer prediction models by 300x over initial estimates, ultimately enabling us to explore questions that previously would have taken years, in mere months. We look forward to seeing what the CS-2 will be able to do with more than double that performance.”