“Team makes multiple processors with 3D memory act like one big chip

What does an ideal neural network chip look like? The most important part is to have oodles of memory on the chip itself, say engineers. That’s because data transfer (from main memory to the processor chip) generally uses the most energy and produces most of the system lag—even compared to the AI computation itself.

Cerebras Systems solved these problems, collectively called the memory wall, by making a computer consisting almost entirely of a single, large chip containing 18 gigabytes of memory. But researchers in France, Silicon Valley, and Singapore have come up with another way.

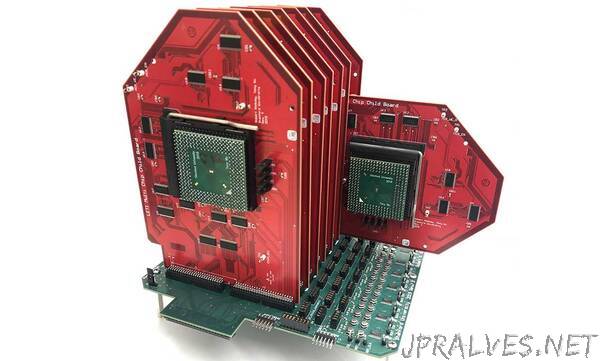

Called Illusion, it uses processors built with resistive RAM memory in a 3D stack built above the silicon logic, so it costs little energy or time to fetch data. By itself, even this isn’t enough, because neural networks are increasingly too large to fit on one chip. So the scheme also requires multiple such hybrid processors and an algorithm that both slices up the network intelligently among the processors and knows when to switch idle processors off rapidly.

In tests, an eight-chip version of Illusion came within 2.5 to 3.5 percent of the energy use and latency of a “dream” processor that had all the needed memory and processing on one chip.

The research team, which included researchers from the French research lab CEA-Leti, Facebook, Nanyang Technological University in Singapore, San Jose State University, and Stanford University, was driven by the fact that neural networks keep getting bigger. “This dream chip is never available in some sense, because it’s a moving target,” says Subhasish Mitra, the Stanford electrical engineering and computer science professor who led the research. “The neural nets get bigger and bigger faster than Moore’s Law can keep up,” he says.

So instead they sought to design a system that would create the illusion of a single processor with a large amount of on-chip memory (hence the project name) even though it was actually made up of multiple hybrid processors. That way Illusion could easily be expanded to accommodate growing neural networks.

Such a system needs three things, explains Mitra. The first is a lot of memory on the chip that can be accessed quickly and with little energy consumption. That’s where the 3D-integrated RRAM comes in. They chose RRAM, “because it is dense, 3D-integrated, and can be accessed quickly from a powered-down state and because it doesn’t lose its data when the power is off,” says H.-S. Philip Wong, a Stanford professor of electrical engineering and a collaborator on the project.

But RRAM does have a drawback. Like flash memory, it wears out after being overwritten too many times. In flash, wear-leveling software tracks how many times each block of memory cells has been overwritten and tries to keep that count even across all the cells in the chip. Stanford theoretical computer scientist Mary Wootters led the team’s effort to invent something similar for RRAM. The result, called Distributed Endurer, has the added burden of ensuring that wear from writing is even across multiple chips.
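To make the idea of leveling wear across several chips concrete, here is a minimal sketch of a generic "least-worn block first" policy. It is not Distributed Endurer, whose internals the article does not describe; the `WearLeveler` class and its methods are hypothetical, and the sketch omits the logical-to-physical address remapping a real wear-leveling scheme would also need.

```python
import heapq


class WearLeveler:
    """Hypothetical least-worn-first block picker spanning several chips.

    A generic wear-leveling illustration, NOT the Distributed Endurer
    algorithm: every write goes to whichever block, on any chip, has
    seen the fewest writes so far.
    """

    def __init__(self, num_chips: int, blocks_per_chip: int) -> None:
        # Min-heap of (write_count, chip, block); the least-worn block is on top.
        self._heap = [
            (0, chip, block)
            for chip in range(num_chips)
            for block in range(blocks_per_chip)
        ]
        heapq.heapify(self._heap)

    def write(self) -> tuple:
        """Choose the least-worn block system-wide and record one write to it."""
        count, chip, block = heapq.heappop(self._heap)
        heapq.heappush(self._heap, (count + 1, chip, block))
        return chip, block

    def write_counts(self) -> dict:
        """Map (chip, block) to the number of writes it has absorbed."""
        return {(chip, block): count for count, chip, block in self._heap}


leveler = WearLeveler(num_chips=2, blocks_per_chip=4)
for _ in range(20):
    leveler.write()
counts = leveler.write_counts()
print(max(counts.values()) - min(counts.values()))  # spread between most- and least-worn block
```

Because writes always land on the globally least-worn block, the gap between the most- and least-worn blocks never exceeds one write, whichever chip the blocks sit on.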

Even with Distributed Endurer and hybrid RRAM and processor chips, powerful neural nets, such as the natural language processors in use today, are still too large to fit on one chip. But using multiple hybrid chips means passing messages between them, eating up energy and wasting time.

The Illusion team’s solution, the second component of their technology, was to chop up the neural network in a way that minimizes message passing. Neural networks are, essentially, a set of nodes where computing happens and the edges that connect them. Each network will have certain nodes, or whole layers of nodes, that have tons of connections.

But there will also be choke points in the network—places where few messages must be passed between nodes. Dividing up a large neural network at these choke points and mapping each part on a separate chip ensures that a minimal amount of data needs to be sent from one chip to another. The Illusion mapping algorithm “automatically identifies the ideal places to cut a neural net to minimize these messages,” says Mitra.
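The cutting problem can be illustrated with a simplified model. The sketch below assumes the network is a plain chain of layers (real networks are general graphs), that each layer's weights must fit in a per-chip memory budget, and that cutting between two layers costs the size of the data the earlier layer sends to the later one. The function `partition_layers` and all sizes are hypothetical; this is a textbook dynamic-programming formulation, not Illusion's mapping algorithm.

```python
import math


def partition_layers(weights, activations, capacity, max_chips):
    """Split a chain of layers into contiguous per-chip groups (hypothetical model).

    weights[i]     -- memory needed for layer i's parameters
    activations[i] -- size of the data layer i sends to layer i + 1
    capacity       -- memory available on each chip
    max_chips      -- number of chips available
    Returns (total_cross_chip_traffic, cuts); a cut at position p means
    layers p - 1 and p are placed on different chips.
    """
    n = len(weights)
    INF = math.inf
    # best[j][c]: least traffic to place layers 0..j-1 on exactly c chips.
    best = [[INF] * (max_chips + 1) for _ in range(n + 1)]
    choice = [[-1] * (max_chips + 1) for _ in range(n + 1)]
    best[0][0] = 0
    for j in range(1, n + 1):
        for c in range(1, max_chips + 1):
            for i in range(j):  # try putting layers i..j-1 on chip c
                if sum(weights[i:j]) > capacity or best[i][c - 1] == INF:
                    continue
                # Cutting before layer i ships layer i-1's output between chips.
                cost = best[i][c - 1] + (activations[i - 1] if i > 0 else 0)
                if cost < best[j][c]:
                    best[j][c] = cost
                    choice[j][c] = i
    c = min(range(max_chips + 1), key=lambda k: best[n][k])
    if best[n][c] == INF:
        raise ValueError("network does not fit on max_chips chips")
    total = best[n][c]
    # Walk the recorded choices backward to recover where the cuts fell.
    cuts, j = [], n
    while c > 0:
        i = choice[j][c]
        if i > 0:
            cuts.append(i)
        j, c = i, c - 1
    return total, sorted(cuts)


# Four equal layers; the link between layers 1 and 2 carries little data.
print(partition_layers([2, 2, 2, 2], [8, 1, 8, 0], capacity=6, max_chips=2))
```

In the usage example the network does not fit on one chip, and of the three ways to split it across two chips, the optimizer picks the choke point between layers 1 and 2, where only one unit of data has to cross between chips.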

But chopping things up like that has its own consequences. Inevitably, one chip will finish its business before another, stalling the system and wasting power. Other multichip systems that run very large neural networks focus on dividing up the network so that all chips stay continually busy, but that comes at the expense of transferring more data among them.

Instead, in a third innovation, the Illusion team engineered the hybrid processors and their controlling algorithm so that each chip can be turned off and on quickly. When a chip is waiting for work, it isn’t consuming any power. CEA-Leti’s 3D RRAM technology was key to making 3D SoCs that can turn off completely within a few clock cycles and restart without losing data, says Mitra.
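A toy energy model shows why fast power-off makes uneven workloads affordable. It assumes each chip draws a fixed power while computing, a lower but nonzero power while switched on and idle, and, if it can power off, pays a one-time wake-up cost instead of idle power. The function `system_energy` and every number in the example are hypothetical, not measurements from the Illusion prototype.

```python
def system_energy(busy_s, total_s, active_w, idle_w, gated, wake_j=0.0):
    """Total energy in joules for chips that finish at different times (toy model).

    busy_s   -- seconds each chip spends computing
    total_s  -- wall-clock time until the slowest chip finishes
    active_w -- power draw while computing, in watts
    idle_w   -- power draw while switched on but waiting, in watts
    gated    -- True if a chip switches fully off while it waits
    wake_j   -- energy cost of one off/on cycle, in joules
    """
    total = 0.0
    for busy in busy_s:
        idle = total_s - busy
        if gated:
            # Off while waiting: pay a single wake-up cost instead of idle power.
            total += active_w * busy + (wake_j if idle > 0 else 0.0)
        else:
            # Stays on while waiting, burning idle power the whole time.
            total += active_w * busy + idle_w * idle
    return total


busy = [4.0, 2.0, 1.0]  # the first chip is the bottleneck
print(system_energy(busy, 4.0, active_w=1.0, idle_w=0.5, gated=False))
print(system_energy(busy, 4.0, active_w=1.0, idle_w=0.5, gated=True, wake_j=0.1))
```

With these made-up numbers, the always-on system spends 9.5 J and the power-gated one about 7.2 J. The saving grows as idle periods lengthen and shrinks as the wake-up cost rises, which is why a chip that can shut down and restart within a few clock cycles matters.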

The team built an eight-chip version of Illusion and took it for a test drive on three deep neural networks. These networks were nowhere near the size of those that are currently stressing today’s computer systems, because each of the Illusion prototypes had only 4 kilobytes of RRAM reserved for the neural network data. The “dream chip” they tested it against was really a single Illusion chip that mimicked the execution of the full neural network.

The eight-chip Illusion system was able to run the neural networks within 3.5 percent of the dream chip’s energy consumption and within 2.5 percent of its execution time. Mitra points out that the system scales up well. Simulations of a 64-chip Illusion with a total of 4 gigabytes of RRAM were just as close to the ideal.

“We are already underway with a new more capable prototype,” says Robert Radway, the Stanford University graduate student who was first author on a paper describing Illusion that appeared this week in Nature Electronics. The next generation of chips will have orders of magnitude more memory and computing capability than the prototypes. And while the first generation was tested on inference, the next generation will be used to train neural networks, which is a much more demanding task.

“Overall, we feel Illusion has profound implications for future technologies,” says Radway. “It opens up a large design space for technology innovations and creates a new scaling path for future systems.””