“Quantum computers may one day solve algorithmic problems that even today’s biggest supercomputers cannot manage. But how do you test a quantum computer to ensure that it is working reliably? Depending on the algorithmic task, certification can be an easy problem or a very difficult one. An international team of researchers has taken an important step towards solving a difficult variation of this problem, using a statistical approach developed at the University of Freiburg. The results of their study are published in the latest edition of Nature Photonics.

Their example of a difficult certification problem is sorting a defined number of photons after they have passed through a defined arrangement of several optical elements. The arrangement offers each photon a number of transmission paths, depending on whether an optical element reflects or transmits it. The task is to predict the probability that photons leave the arrangement at defined points, given their positions at the entrance. As the optical arrangement grows and more photons are sent through it, the number of possible paths and output distributions rises steeply as a result of the uncertainty principle which underlies quantum mechanics, so that the exact probabilities cannot be predicted with the computers available to us today. Physical principles say that different types of particles, such as photons or electrons, should yield differing probability distributions. But how can scientists tell these distributions, and differing optical arrangements, apart when there is no way of making exact calculations?
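To make the task concrete, here is a minimal Python sketch of the smallest possible case: two particles entering a single 50:50 beam splitter. It relies on the standard textbook result, not stated in the article, that for an arrangement described by a unitary matrix the probability of a collision-free output is the squared magnitude of a matrix permanent for photons (bosons) and of a determinant for fermions such as electrons. The names `BEAM_SPLITTER`, `permanent`, `determinant` and `output_probability` are illustrative assumptions, and the brute-force sums over permutations show why the calculation becomes hopeless for larger arrangements.

```python
from itertools import permutations
from math import prod, sqrt


def permanent(m):
    """Permanent of a square matrix: brute-force sum over all permutations."""
    n = len(m)
    return sum(prod(m[i][p[i]] for i in range(n)) for p in permutations(range(n)))


def determinant(m):
    """Determinant by the same sum, but with a sign for each permutation."""
    n = len(m)
    total = 0
    for p in permutations(range(n)):
        inversions = sum(1 for i in range(n) for j in range(i + 1, n) if p[i] > p[j])
        sign = -1 if inversions % 2 else 1
        total += sign * prod(m[i][p[i]] for i in range(n))
    return total


def output_probability(u, inputs, outputs, kind):
    """Probability that particles entering at `inputs` leave at `outputs`.

    Assumes at most one particle per input mode and per output mode.
    `u[o][i]` is the amplitude for going from input mode i to output mode o.
    """
    a = [[u[o][i] for i in inputs] for o in outputs]
    if kind == "boson":
        return abs(permanent(a)) ** 2
    if kind == "fermion":
        return abs(determinant(a)) ** 2
    if kind == "distinguishable":
        # No interference: add up classical probabilities instead of amplitudes.
        return permanent([[abs(x) ** 2 for x in row] for row in a])
    raise ValueError(f"unknown particle kind: {kind}")


# Assumed toy arrangement: one 50:50 beam splitter with two modes.
s = 1 / sqrt(2)
BEAM_SPLITTER = [[s, s], [s, -s]]

for kind in ("boson", "fermion", "distinguishable"):
    p = output_probability(BEAM_SPLITTER, (0, 1), (0, 1), kind)
    print(f"{kind}: P(one particle at each exit) = {p:.3f}")
```

In this toy case two photons never leave through different exits, two fermions always do, and distinguishable particles do so half the time. The sum in `permanent` runs over n! permutations, and unlike the determinant it has no known efficient shortcut, which is why exact predictions are out of reach for many photons.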

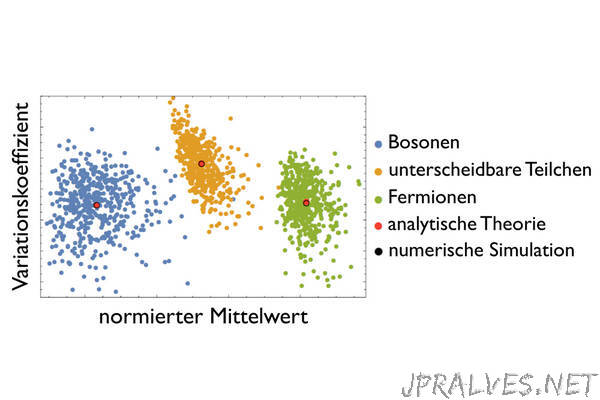

An approach developed in Freiburg by researchers from Rome; Milan; Redmond, USA; Paris; and Freiburg now makes it possible for the first time to identify characteristic statistical signatures across unmeasurable probability distributions. Instead of a complete “fingerprint,” they were able to distill the information from data sets that had been reduced to make them usable. Using that information, they were able to discriminate between various particle types and between distinctive features of optical arrangements. The team also showed that this distillation process can be improved by drawing on established machine learning techniques, with physics providing the key information on which data set should be searched for the relevant patterns. And because this approach becomes more accurate for bigger numbers of particles, the researchers hope that their findings take us a key step closer to solving the certification problem.”
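The idea of a reduced data set can be illustrated with a short Python sketch. It is not the team's actual procedure, which the article does not spell out. As an assumption, it takes the correlation between particle counts at pairs of exits as the reduced quantity, computed directly from recorded detection events rather than from the full probability distribution. The function name `two_mode_correlators` and the three sample lists are hypothetical, and the samples are idealized hand-made counts for a single 50:50 beam splitter, not experimental data.

```python
from itertools import combinations
from statistics import mean


def two_mode_correlators(samples):
    """Return C_ij = <n_i n_j> - <n_i><n_j> for every pair of exits i < j.

    `samples` is a list of detection events, each a tuple giving the number
    of particles counted at every exit.
    """
    n_modes = len(samples[0])
    avg = [mean(s[k] for s in samples) for k in range(n_modes)]
    return {
        (i, j): mean(s[i] * s[j] for s in samples) - avg[i] * avg[j]
        for i, j in combinations(range(n_modes), 2)
    }


# Idealized toy events for two particles on one beam splitter (assumed):
BOSON_LIKE = [(2, 0), (0, 2)] * 50                     # always leave together
DISTINGUISHABLE_LIKE = [(2, 0), (0, 2), (1, 1), (1, 1)] * 25
FERMION_LIKE = [(1, 1)] * 100                          # never leave together

for name, events in [
    ("boson-like", BOSON_LIKE),
    ("distinguishable-like", DISTINGUISHABLE_LIKE),
    ("fermion-like", FERMION_LIKE),
]:
    c = two_mode_correlators(events)
    print(f"{name}: C_01 = {c[(0, 1)]:+.2f}")
```

Even this single number separates the three toy cases. The number of pairs grows only quadratically with the number of exits, whereas the number of possible output distributions grows far faster, which is what makes a reduced data set of this kind usable.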