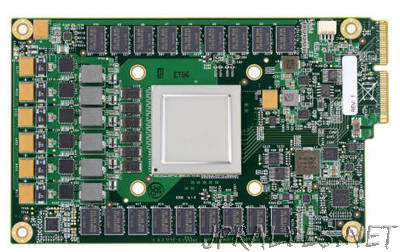

“We’ve been using compute-intensive machine learning in our products for the past 15 years. We use it so much that we even designed an entirely new class of custom machine learning accelerator, the Tensor Processing Unit. Just how fast is the TPU, actually? Today, in conjunction with a TPU talk for a National Academy of Engineering meeting at the Computer History Museum in Silicon Valley, we’re releasing a study that shares new details on these custom chips, which have been running machine learning applications in our data centers since 2015.”