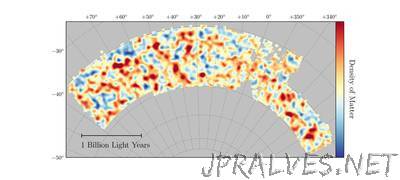

“The Ohio Supercomputer Center played a critical role in helping researchers reach a milestone in mapping the growth of the universe from its infancy to the present day. The new results released Aug. 3 confirm the surprisingly simple but puzzling theory that the present universe is composed of only 4 percent ordinary matter, 26 percent mysterious dark matter, and the remaining 70 percent mysterious dark energy, which causes the accelerating expansion of the universe. The findings from researchers at The Ohio State University and their colleagues from the Dark Energy Survey (DES) collaboration are based on data collected during the first year of the DES, which covers more than 1,300 square degrees of the sky, or about the area of 6,000 full moons. DES uses the Dark Energy Camera mounted on the Blanco 4m telescope at the Cerro Tololo Inter-American Observatory, high in the Chilean Andes. According to Klaus Honscheid, Ph.D., professor of physics and leader of the Ohio State DES group, OSC was critical to getting the research done in a timely manner. His computational specialists, postdoctoral fellows Michael Troxel and Niall MacCrann, used an estimated 300,000 core hours on OSC’s Ruby Cluster through a condo arrangement between OSC and Ohio State’s Center for Cosmology and AstroParticle Physics (CCAPP). The team took advantage of OSC’s Anaconda environment for standard work. Anaconda is an open-source distribution of the Python and R programming languages for large-scale data processing, predictive analytics and scientific computing. The group then used its own software to evaluate the multi-dimensional parameter space using Markov Chain Monte Carlo techniques, which are used to generate fair samples from a probability distribution. The team also ran validation code, or null tests, for object selection, as well as fitting code that extracts information about objects in the images by simultaneously fitting the same object in all of its available exposures.
The bulk of the team’s 4 million computational allocations are at the National Energy Research Scientific Computing Center (NERSC), a federal supercomputing facility in California. However, because of a backlog at NERSC, OSC’s role became key. According to Honscheid, the team is considering increasing the amount of work done through OSC for the next round of analysis. The total survey will last five years, he said, meaning the need for high-performance computing will only increase. To collect the data, the team built an incredibly powerful camera for the Blanco 4m telescope.”
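
The Markov Chain Monte Carlo technique mentioned in the quoted release can be illustrated with a minimal Metropolis sampler. This is only a sketch and not the DES team's software: the two-parameter Gaussian target, its made-up means and widths, and the names `log_posterior` and `metropolis` are all assumptions chosen for illustration.

```python
import math
import random


def log_posterior(theta):
    """Hypothetical target: an uncorrelated 2-D Gaussian (illustrative numbers, not DES results)."""
    means = (0.3, 0.8)     # assumed parameter means
    sigmas = (0.05, 0.1)   # assumed parameter widths
    return -0.5 * sum(((t - m) / s) ** 2 for t, m, s in zip(theta, means, sigmas))


def metropolis(log_prob, start, step_sizes, n_steps, seed=0):
    """Random-walk Metropolis: returns the chain and the fraction of accepted proposals."""
    rng = random.Random(seed)
    current = list(start)
    current_lp = log_prob(current)
    chain = []
    accepted = 0
    for _ in range(n_steps):
        # Propose a Gaussian step around the current point.
        proposal = [x + rng.gauss(0.0, s) for x, s in zip(current, step_sizes)]
        proposal_lp = log_prob(proposal)
        # Accept with probability min(1, p(proposal) / p(current)).
        # 1.0 - rng.random() lies in (0, 1], so the logarithm is always defined.
        if math.log(1.0 - rng.random()) < proposal_lp - current_lp:
            current, current_lp = proposal, proposal_lp
            accepted += 1
        chain.append(list(current))
    return chain, accepted / n_steps


chain, acceptance_rate = metropolis(
    log_posterior, start=[0.25, 0.7], step_sizes=[0.05, 0.1], n_steps=20000
)
samples = chain[2000:]  # discard the first steps as burn-in
mean_0 = sum(s[0] for s in samples) / len(samples)
mean_1 = sum(s[1] for s in samples) / len(samples)
print(f"acceptance rate: {acceptance_rate:.2f}")
print(f"estimated means: {mean_0:.3f}, {mean_1:.3f}")
```

After burn-in, the retained points are distributed according to the target, so averages over the chain approximate the parameter means. A real cosmological analysis would replace the toy target with a likelihood computed from survey data and would typically explore many more parameters.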